Your Document Assistant - Local RAG Powered by Llama 3 8B

What is RAG?

Retrieval-Augmented Generation (RAG) has gained significant attention with the rise of large language models (LLMs). RAG lets an LLM draw on knowledge beyond its training data, whether from public or internal sources. It does this by retrieving relevant passages at query time and adding them to the prompt. This helps data professionals find relevant information in large data sources and condense complex material into actionable knowledge.
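The "augmentation" step can be illustrated in a few lines. The retrieved passages are placed in the prompt ahead of the question, so the model answers from that context. This is a minimal sketch: `build_rag_prompt` and the prompt wording are hypothetical and are not this project's actual template.

```python
def build_rag_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user's question into one prompt.

    Hypothetical template for illustration only; real projects tune the wording.
    """
    # Number each passage so the model can refer to its sources
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Use the following context to answer the question. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_rag_prompt(
    "Who signed the contract?",
    ["The contract was signed by Alice on 3 May.", "Payment is due in 30 days."],
)
print(prompt)
```

The LLM never sees the whole document collection, only the passages the retriever selected. This keeps prompts small enough for a local 8B model.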

Key Features

This project combines Llama 3 8B with the LangChain framework to create a flexible, local solution for interacting with documents. It is designed to help professionals retrieve and analyze information from their documents efficiently. It also preserves data privacy: because the LLM runs locally, document contents do not need to be sent to an external service.

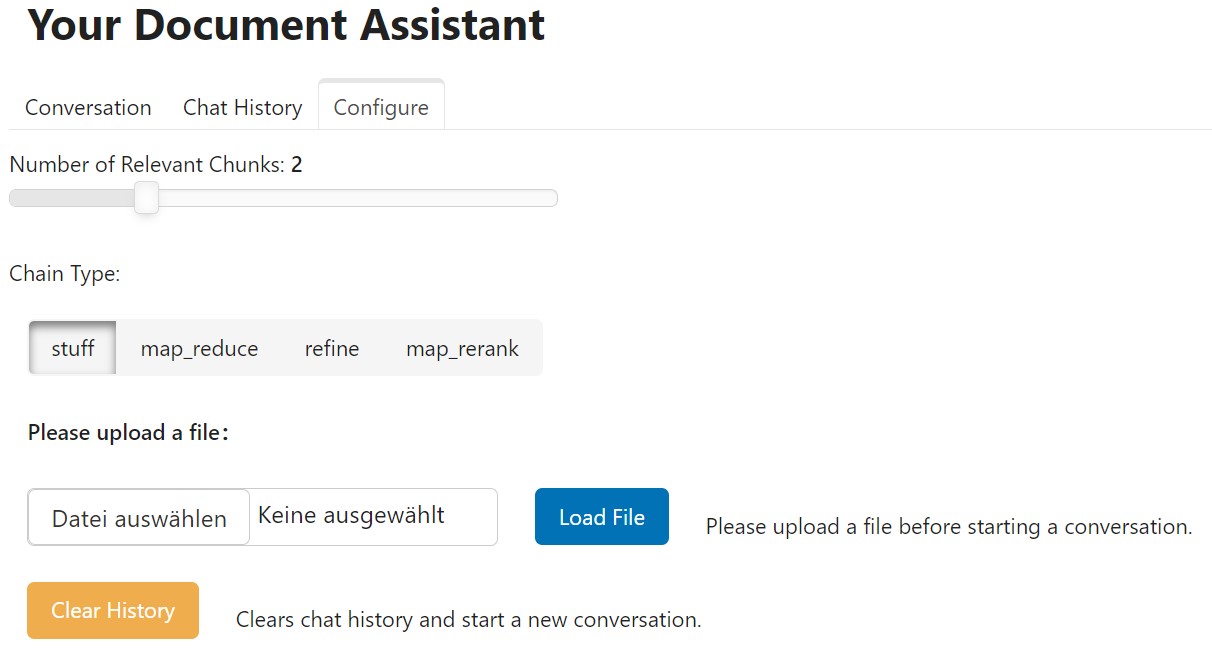

Customizable Chain Type

The project lets users choose among several chain types (stuff, map-reduce, refine, and map-rerank) to suit the use case. Each type combines the retrieved chunks with the question differently, trading off speed, cost, and answer quality.

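- Stuff: Places all retrieved chunks in a single prompt. It is simple and fast, and ideal for a small number of short chunks that fit within the model's context window.

- Map-Reduce: Runs the model on each chunk separately, then combines the partial answers in a final call. It is suitable for larger documents whose retrieved text would not fit in one prompt.

- Refine: Builds an answer from the first chunk, then revises it with each subsequent chunk. It is good for complex queries that require nuanced integration of information, although the calls must run one after another.

- Map-Rerank: Answers from each chunk separately, scores each answer, and returns the highest-scoring one. It is best when relevance ranking is critical for obtaining the best results, but it can be more computationally expensive.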
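To make these differences concrete, here is a toy sketch of how each strategy routes chunks through the model. `llm` is a hypothetical stand-in for any prompt-in, text-out call. `scored_llm` is a hypothetical call that also returns a relevance score. LangChain's real chains use their own prompts and output parsing, so this only illustrates the control flow and the number of model calls.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, text out


def stuff(llm: LLM, chunks: list[str], q: str) -> str:
    # One call: every chunk goes into a single prompt
    return llm(f"Context: {' '.join(chunks)}\nQuestion: {q}")


def map_reduce(llm: LLM, chunks: list[str], q: str) -> str:
    # Map: answer from each chunk independently (these calls could run in parallel)
    partial = [llm(f"Context: {c}\nQuestion: {q}") for c in chunks]
    # Reduce: one more call merges the partial answers
    return llm(f"Combine these answers: {' | '.join(partial)}\nQuestion: {q}")


def refine(llm: LLM, chunks: list[str], q: str) -> str:
    # Sequential: answer from the first chunk, then revise with each later chunk
    answer = llm(f"Context: {chunks[0]}\nQuestion: {q}")
    for c in chunks[1:]:
        answer = llm(f"Existing answer: {answer}\nNew context: {c}\nRefine for: {q}")
    return answer


def map_rerank(scored_llm: Callable[[str], tuple[str, float]], chunks: list[str], q: str) -> str:
    # One scored answer per chunk; keep the answer with the highest score
    scored = [scored_llm(f"Context: {c}\nQuestion: {q}") for c in chunks]
    return max(scored, key=lambda pair: pair[1])[0]


# Stub model that records every prompt it receives
calls: list[str] = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"answer#{len(calls)}"


def fake_scored_llm(prompt: str) -> tuple[str, float]:
    # Pretend only chunk "b" contains the answer
    return (prompt.splitlines()[0], 1.0 if prompt.startswith("Context: b") else 0.0)


chunks = ["a", "b", "c"]
for strategy in (stuff, map_reduce, refine):
    calls.clear()
    strategy(fake_llm, chunks, "q")
    print(f"{strategy.__name__}: {len(calls)} call(s)")
print(map_rerank(fake_scored_llm, chunks, "q"))
```

With three chunks, the loop reports 1 call for stuff, 4 for map-reduce, and 3 for refine, and map-rerank returns `Context: b`. The call counts show why stuff is the cheapest option when everything fits in one prompt.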

Adjustable Number of Relevant Chunks

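Users can set how many chunks (k) the retriever returns for each query, which controls how much context the model receives. More chunks raise the chance of including the relevant passage, but they also lengthen the prompt and slow responses. Fewer chunks are faster but may miss information. Tuning k therefore balances relevance against performance.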
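The role of k can be shown with a toy top-k retriever. This sketch ranks chunks by cosine similarity between bag-of-words vectors, which stand in for the embedding vectors a real vector store would use. `bow`, `cosine`, and `top_k_chunks` are hypothetical helpers, not part of this project or LangChain.

```python
import math
import re
from collections import Counter


def bow(text: str) -> Counter:
    # Bag-of-words vector: lowercase word counts (a stand-in for a real embedding)
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k_chunks(query: str, chunks: list[str], k: int) -> list[str]:
    # Score every chunk against the query and keep the k most similar
    q = bow(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, bow(c)), reverse=True)
    return ranked[:k]


chunks = [
    "The invoice total is 1200 euros.",
    "The invoice is due on 30 June.",
    "Our office is closed on public holidays.",
]
print(top_k_chunks("When is the invoice due?", chunks, k=1))
print(top_k_chunks("When is the invoice due?", chunks, k=2))
```

With k=1, only the due-date chunk reaches the model. With k=2, the invoice-total chunk is added as well. This gives more context at the cost of a longer prompt.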

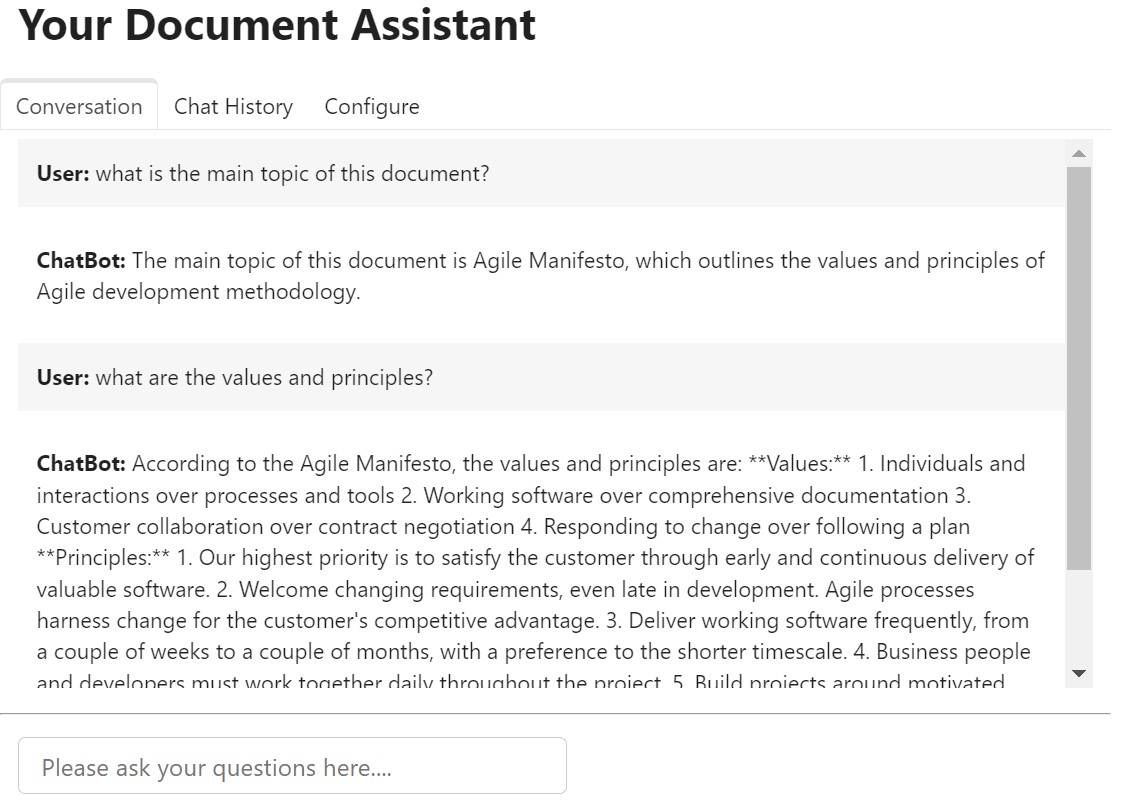

Document Interaction

Users can interact with the chatbot, named "Your Document Assistant", after loading a PDF file. The chatbot has memory capabilities, allowing it to "remember" previous queries and answers, thus enabling a continuous conversation. For users wishing to start a new conversation, there is an option to clear the conversation history and begin fresh.
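One way to implement this memory is to keep a list of (question, answer) pairs and pass it to the chain with each new question. LangChain's `ConversationalRetrievalChain` accepts its `chat_history` input in this form, but whether this project stores history exactly this way is an assumption. `ConversationMemory` and `echo_chain` are hypothetical names used only for this sketch.

```python
from typing import Callable

History = list[tuple[str, str]]  # (question, answer) pairs


class ConversationMemory:
    """Hypothetical chat-history store for a question-answering chain."""

    def __init__(self) -> None:
        self.history: History = []

    def ask(self, chain: Callable[[str, History], str], question: str) -> str:
        # Pass a copy of the earlier turns with the new question, then record this turn
        answer = chain(question, list(self.history))
        self.history.append((question, answer))
        return answer

    def clear(self) -> None:
        # Forget all previous turns, like the "clear history" option
        self.history.clear()


def echo_chain(question: str, history: History) -> str:
    # Stand-in for the retrieval chain: reports how many earlier turns it received
    return f"{question} [history: {len(history)}]"


memory = ConversationMemory()
memory.ask(echo_chain, "What is the deadline?")
print(memory.ask(echo_chain, "And who approves it?"))  # prints "And who approves it? [history: 1]"
memory.clear()
print(len(memory.history))  # prints 0
```

Passing the history lets a follow-up such as "And who approves it?" be interpreted in light of the earlier question. Clearing the list resets that context.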

Code Implementation

Here’s the callback that loads a document and applies the chosen chain type and number of chunks, taken from the implementation, which runs in a JupyterLab environment. The full implementation can be found here.

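```python
import panel as pn


# Method of the chatbot class. `file_input` (a pn.widgets.FileInput),
# `button_load` (a pn.widgets.Button) and `load_db` are defined elsewhere
# in the notebook. `count` is not used in the body.
def call_load_db(self, count, k, chain_type):
    if file_input.value is None:  # check whether a file has been uploaded
        return pn.pane.Markdown("Please upload a file before starting a conversation.")

    file_input.save("temp.pdf")  # write the uploaded PDF to disk
    self.loaded_file = file_input.filename  # remember the file name

    button_load.button_style = "outline"  # visual cue while the database loads

    # Store the number of chunks (k) and the chain type chosen by the user
    self.k_value = k
    self.chain_type_value = chain_type

    # Build the question-answering chain over the saved PDF
    self.qa = load_db("temp.pdf", self.chain_type_value, self.k_value)
    self.file_loaded = True

    button_load.button_style = "solid"  # restore the button style
    self.clr_history()  # start a fresh conversation for the new document

    return pn.pane.Markdown(f"Loaded File: {self.loaded_file}")
```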